Winterview Inc.

B2C Edtech — Boosting feature adoption by 34% by reducing consequences of user error

Direct line of questioning

The way that users typically left out key information allowed us to ask them a direct question to obtain it. For example, asking about a specific % metric.

Faster, better, with no thinking required

A solution users could trust instantly

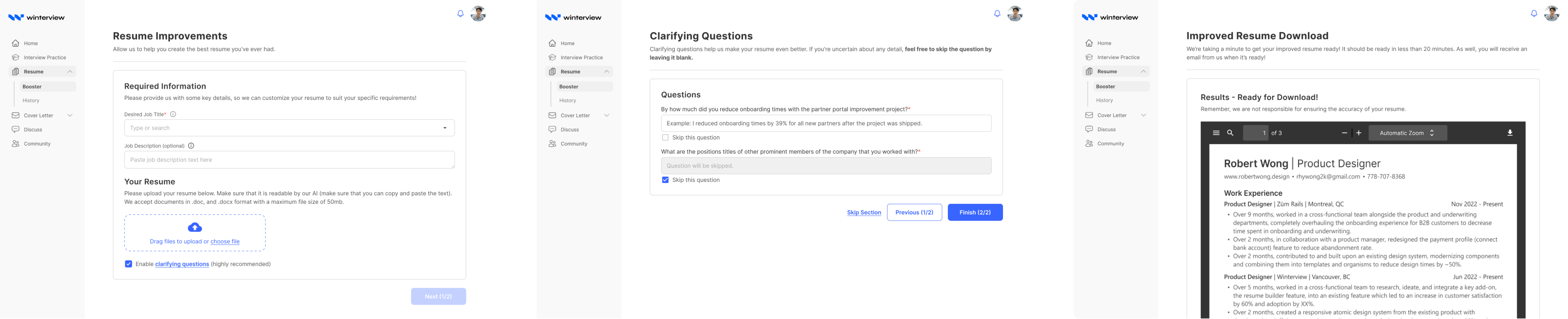

After rounds of feedback and iteration, we shipped a streamlined version of the tool that focused on clarity, ease, and speed. We paused development on the resume builder due to high user effort and low ROI, with plans to revisit when the time is right.

Disclaimer: This case study represents my personal perspective on a design project I contributed to. My views are my own and do not necessarily reflect the views of Winterview Inc, its users, or its clients.

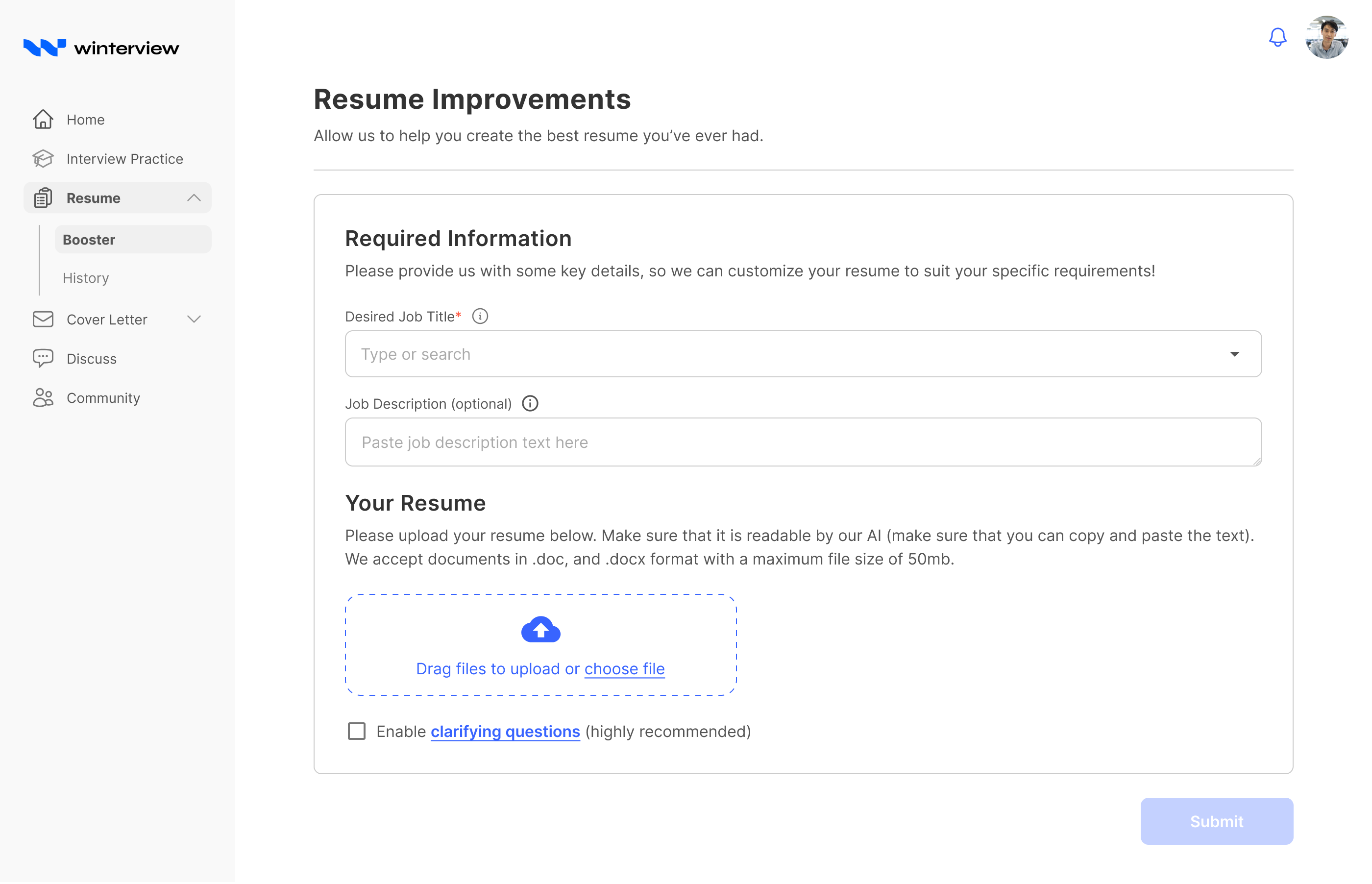

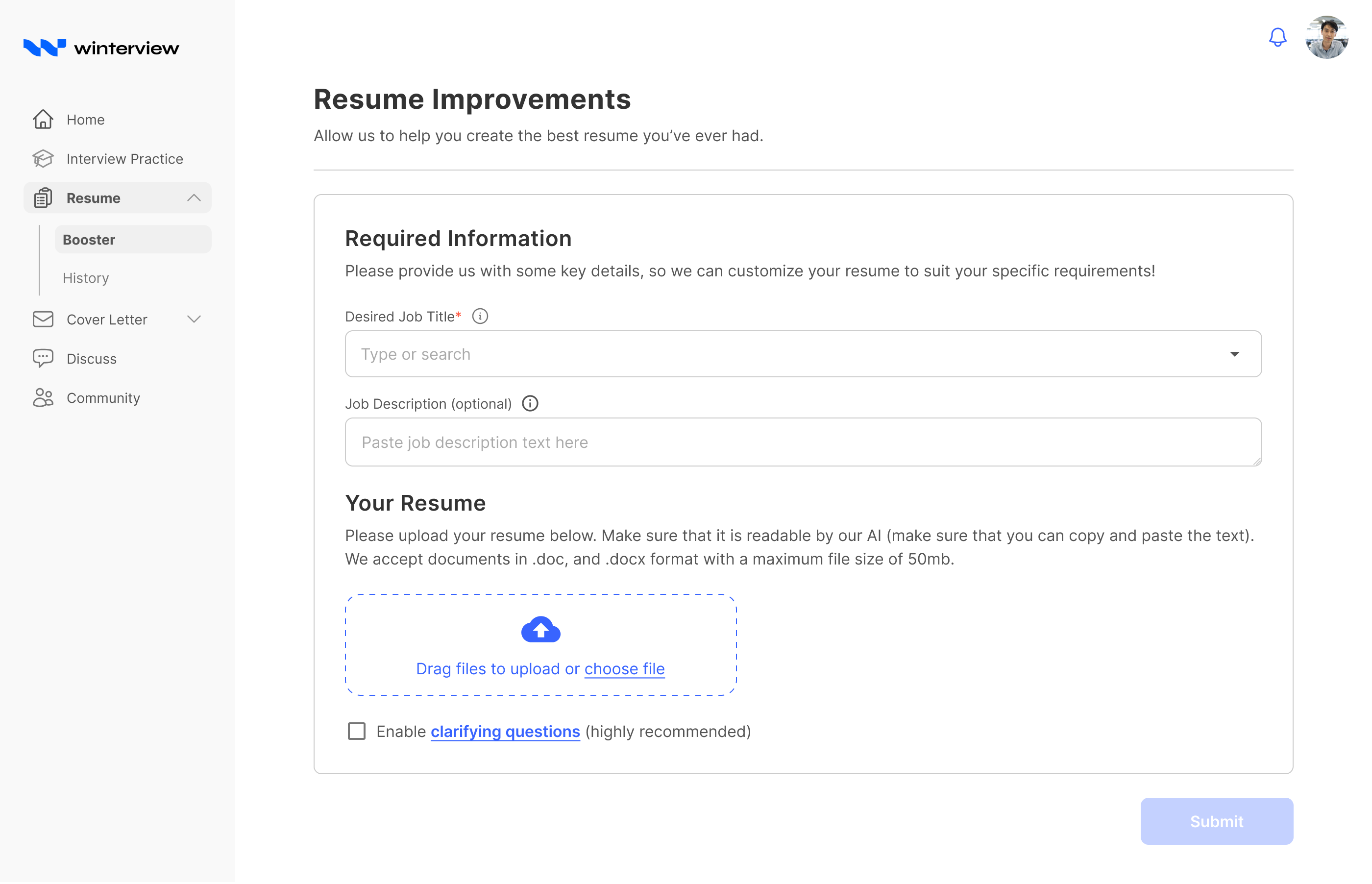

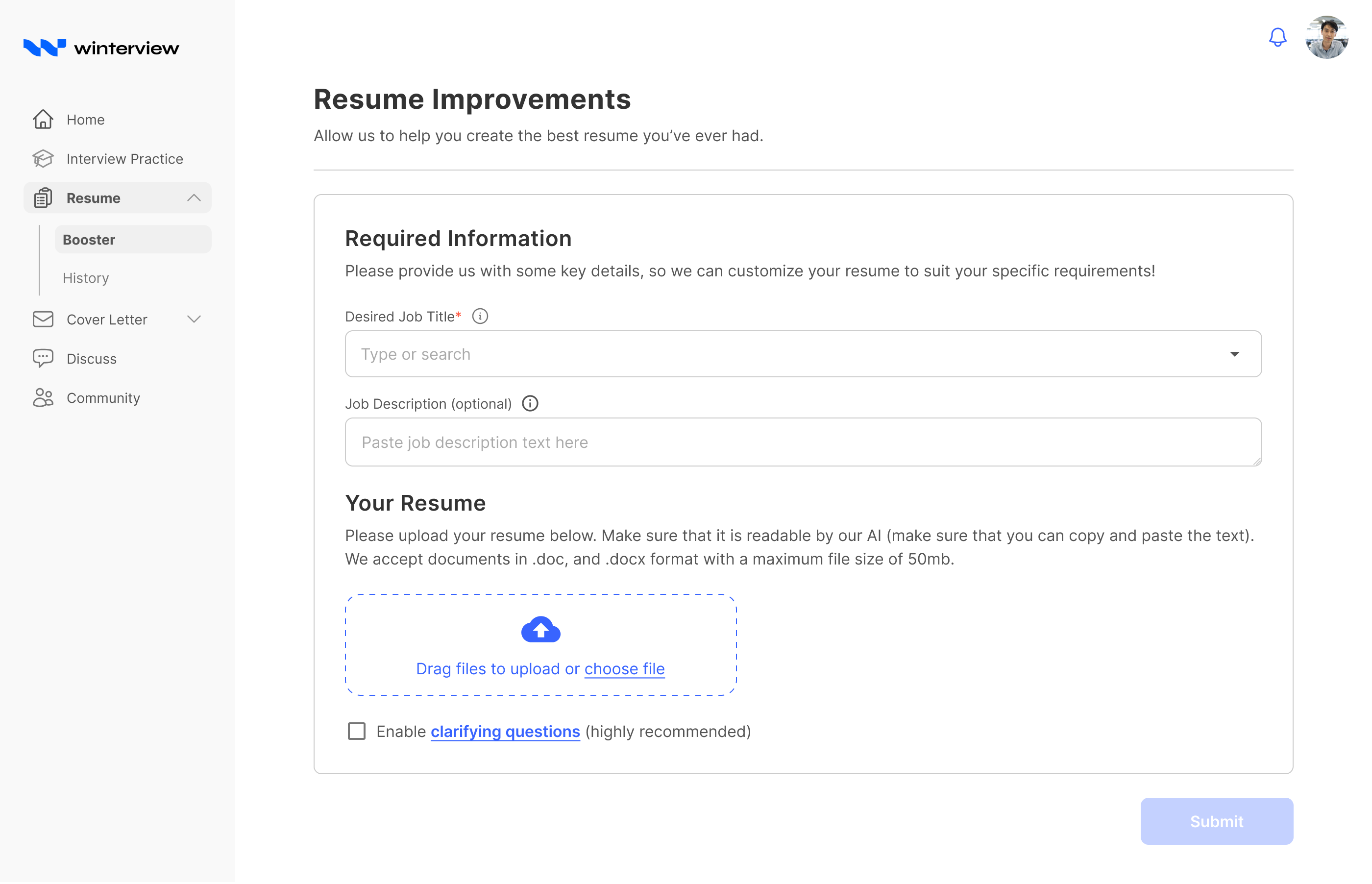

One of Winterview’s core features was the Resume Booster, a tool that matched applicants’ resumes to specific job descriptions. Adoption was limited, often because resumes were missing critical information, especially resumes from users unfamiliar with what employers expect. By identifying this gap and reducing the consequences of missing content, we increased adoption of the feature by 34%.

Problem

How might we help users, especially those unfamiliar with resume best practices, fill in key information gaps?

Outcome

34% increase in adoption

84% CSAT among 200+ users

2x feature adoption

Incompatible resumes, broken expectations

Why we redesigned

Imagine a recruiter sends you to Winterview to polish your resume, expecting a major upgrade. Instead, the tool struggles due to missing information, and you’re left confused, disappointed, and unprepared.

Expectation

Confident, recruiter-ready resumes that help hesitant users move forward.

Reality

Minimal improvements due to missing content and unclear guidance. Users lose trust and blame Winterview.

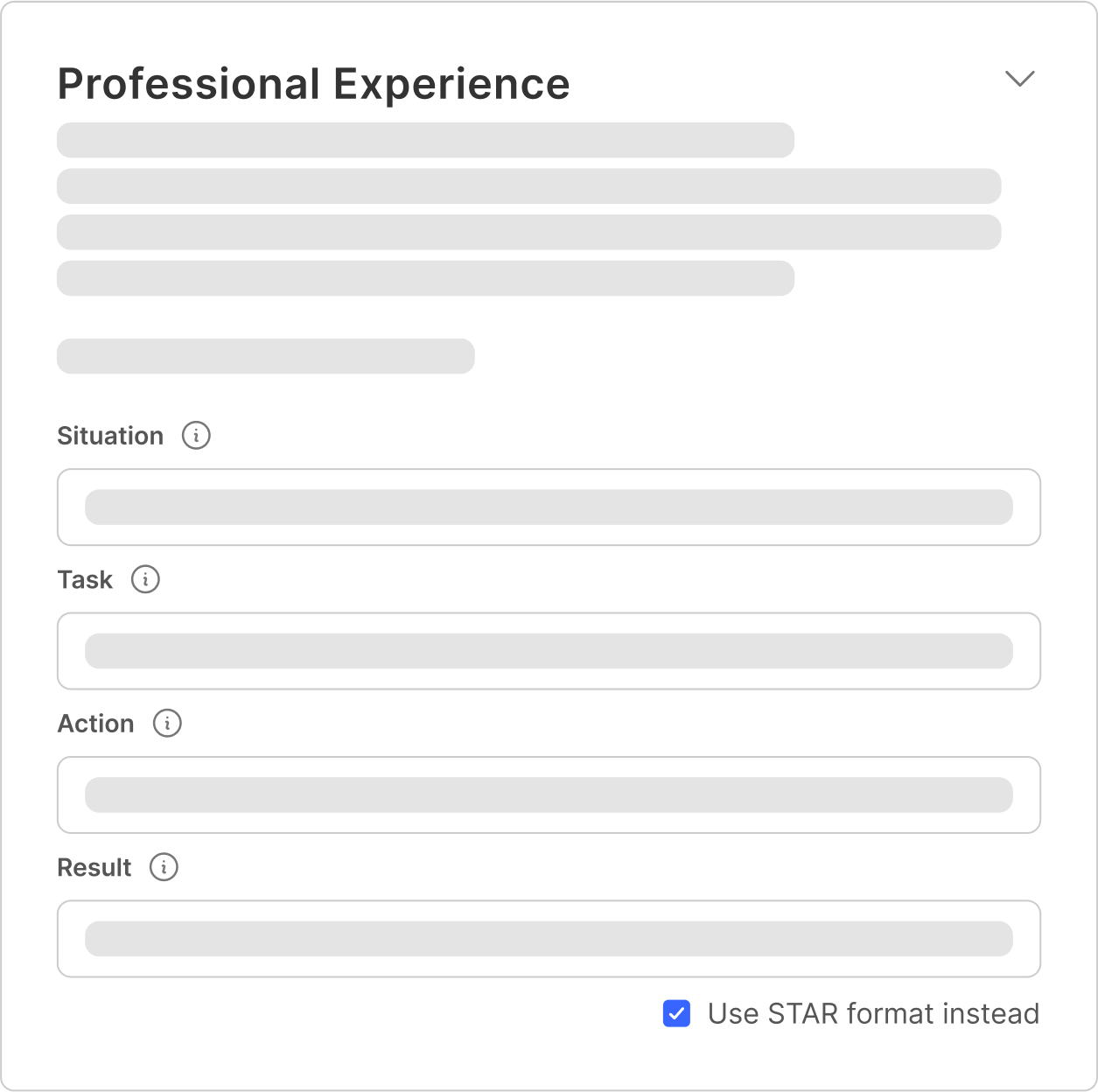

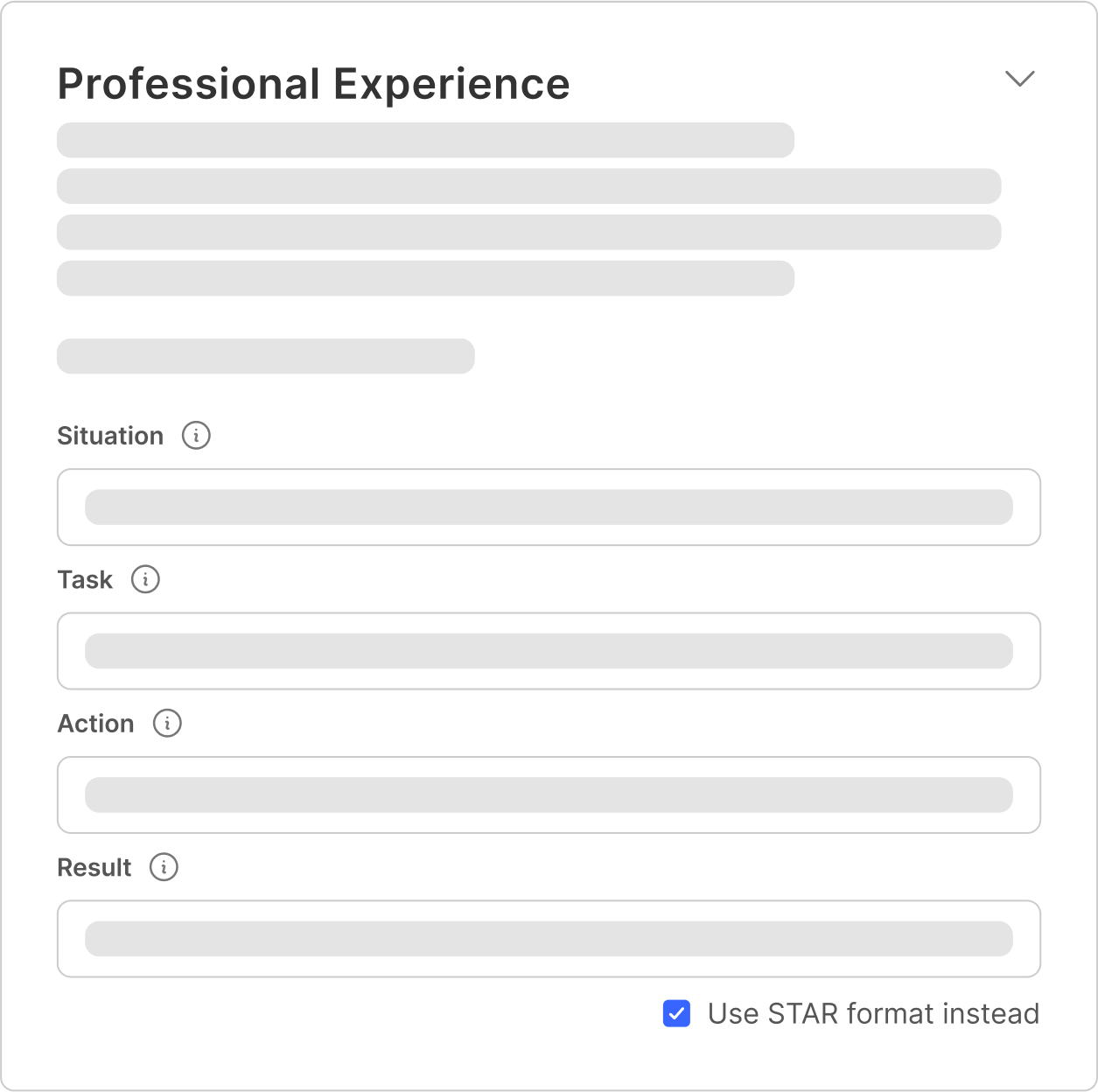

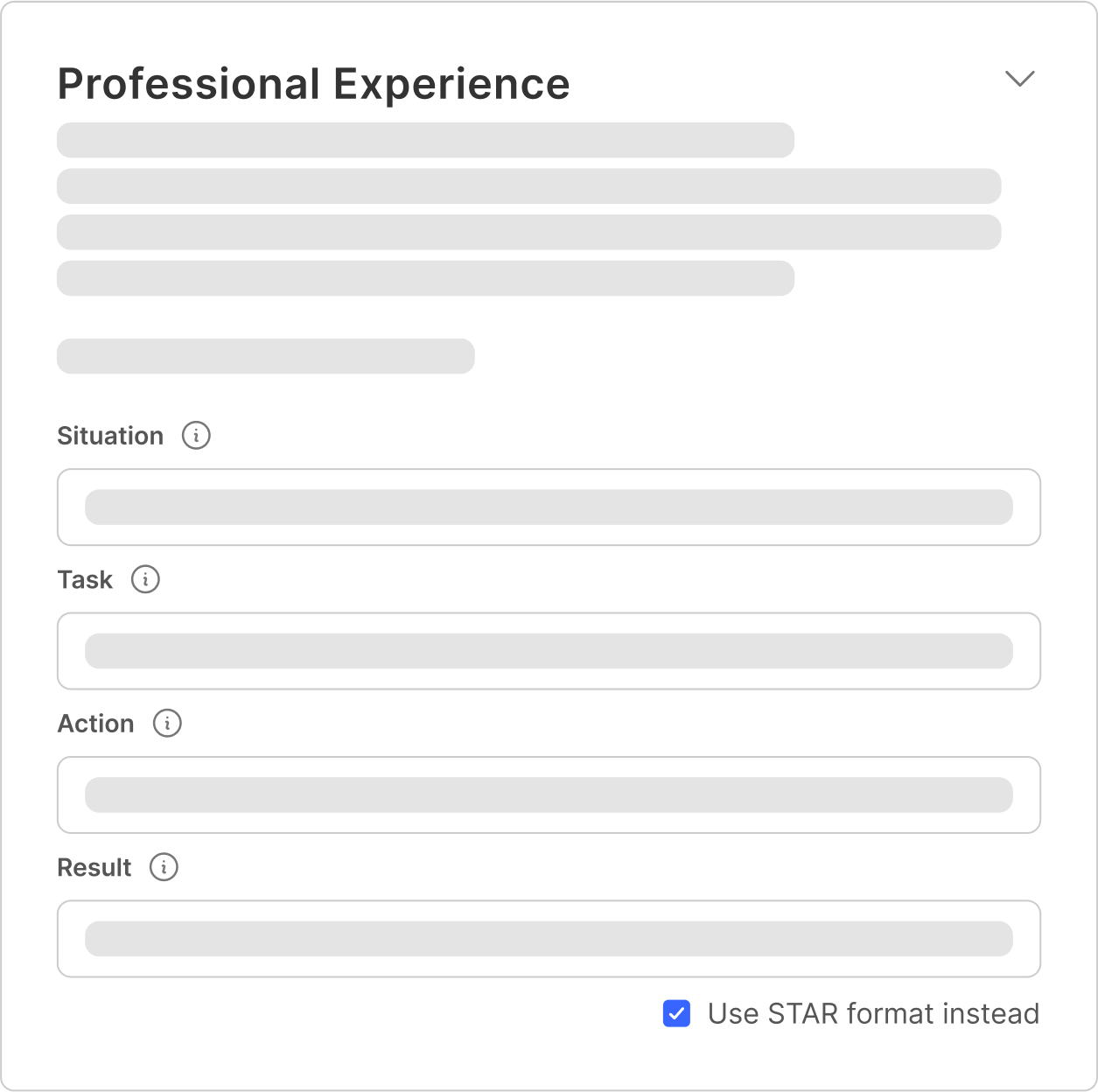

STAR methodology

Guiding users to best practices

To improve resume quality, we guided users through the STAR framework: Situation, Task, Action, Result. This helped them write stronger, impact-driven stories with clear metrics.

Modular building blocks

Users filled out each STAR element one at a time, breaking the process into manageable, low-friction steps.

AI-powered synthesis

Once complete, our AI combined the pieces into polished resume bullets tailored to the job. When users provided enough context, results were promising.

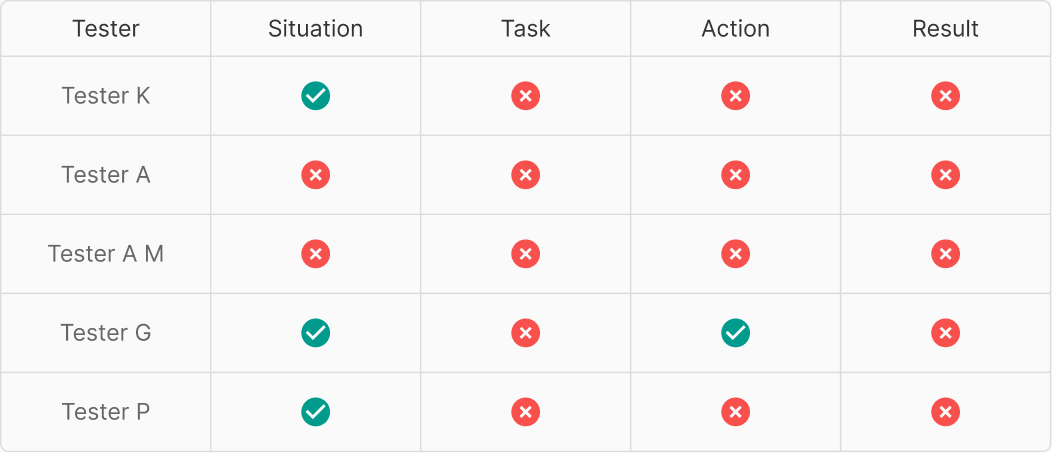

It didn’t land

What usability testing revealed

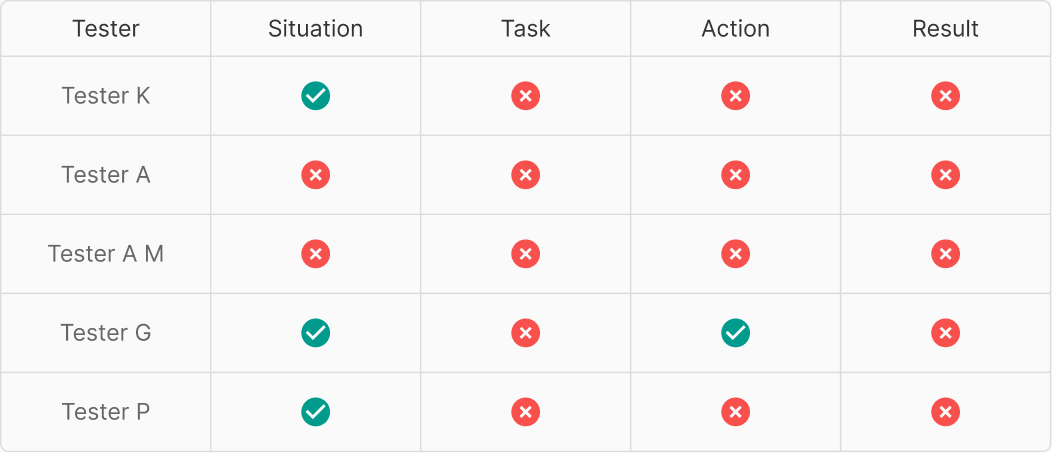

We conducted technical usability testing to evaluate how well users could complete STAR responses. Our benchmark: each response should take under 2 minutes with appropriate content.

01

Cognitive overload

Users took up to 4 minutes just to fill out one field. Instructions weren’t sticky, and many struggled to recall the right examples on the spot.

02

Skepticism

Many didn’t think their resumes were the issue. As a result, they distrusted the tool’s suggestions and saw little reason to engage deeply.

03

Effort to payoff

One STAR bullet took an average of 10 minutes. A complete resume with 9 bullets would take about 90 minutes, far too long for hesitant users.

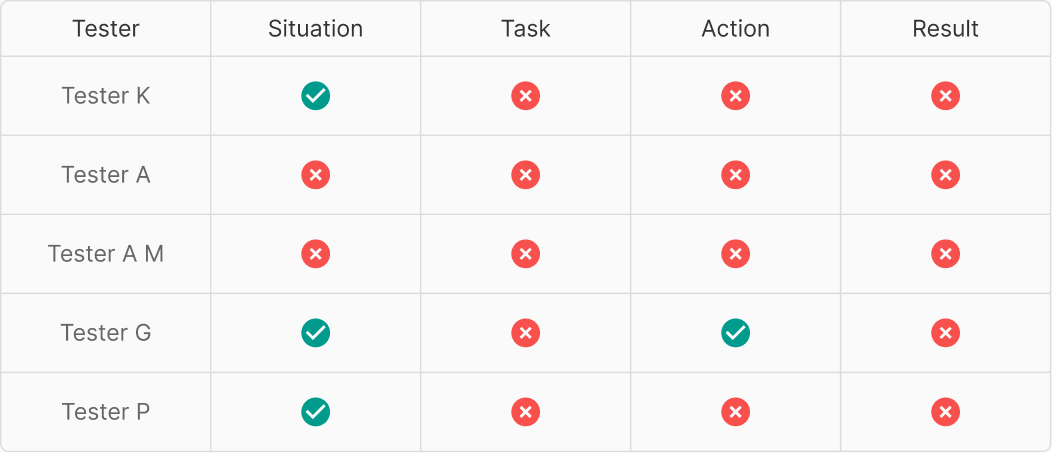

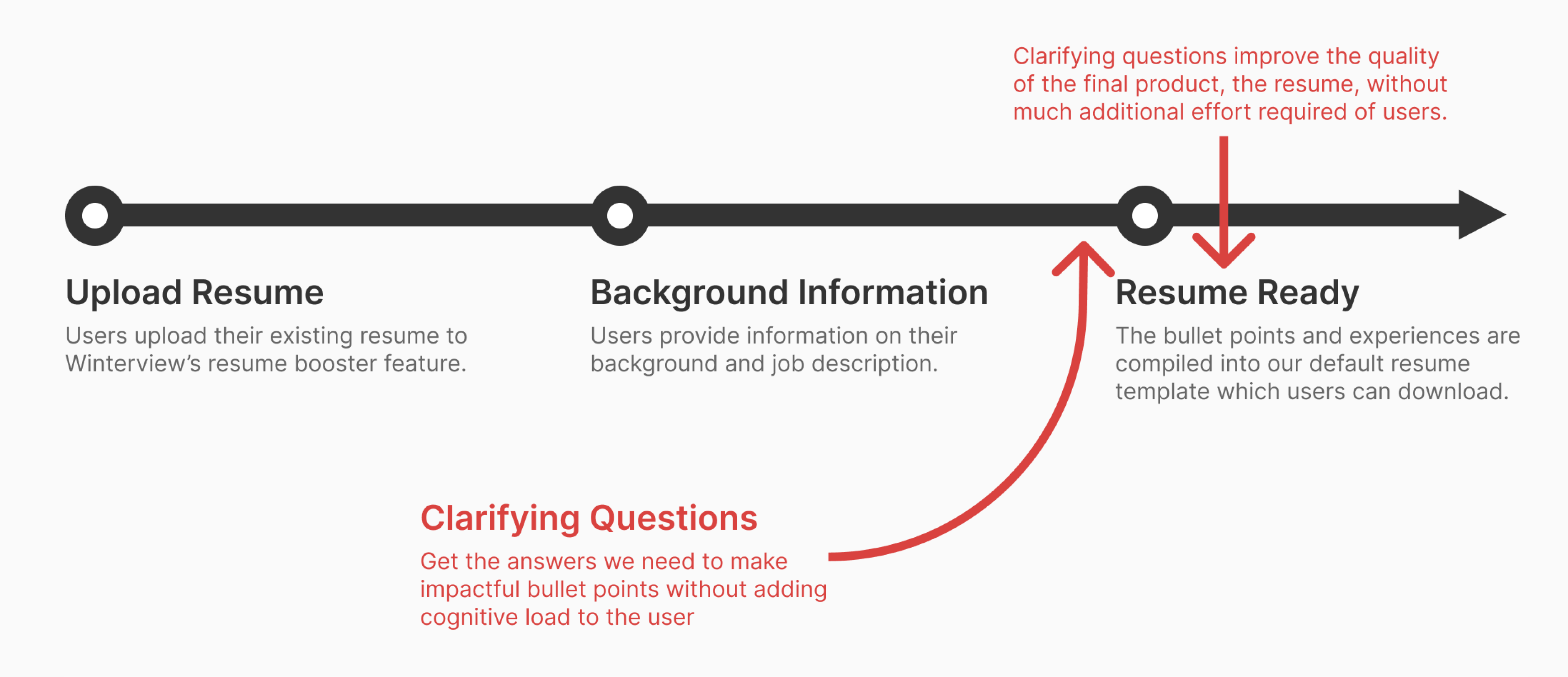

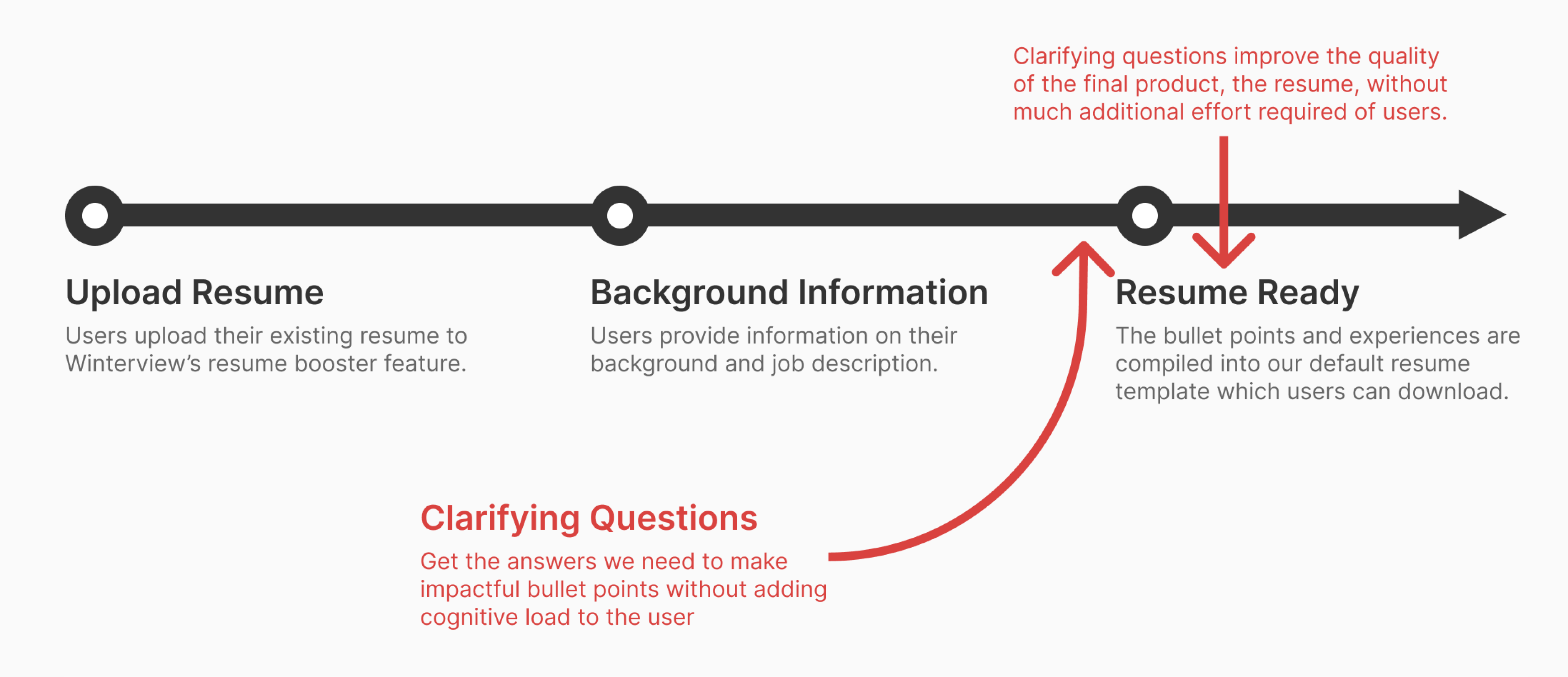

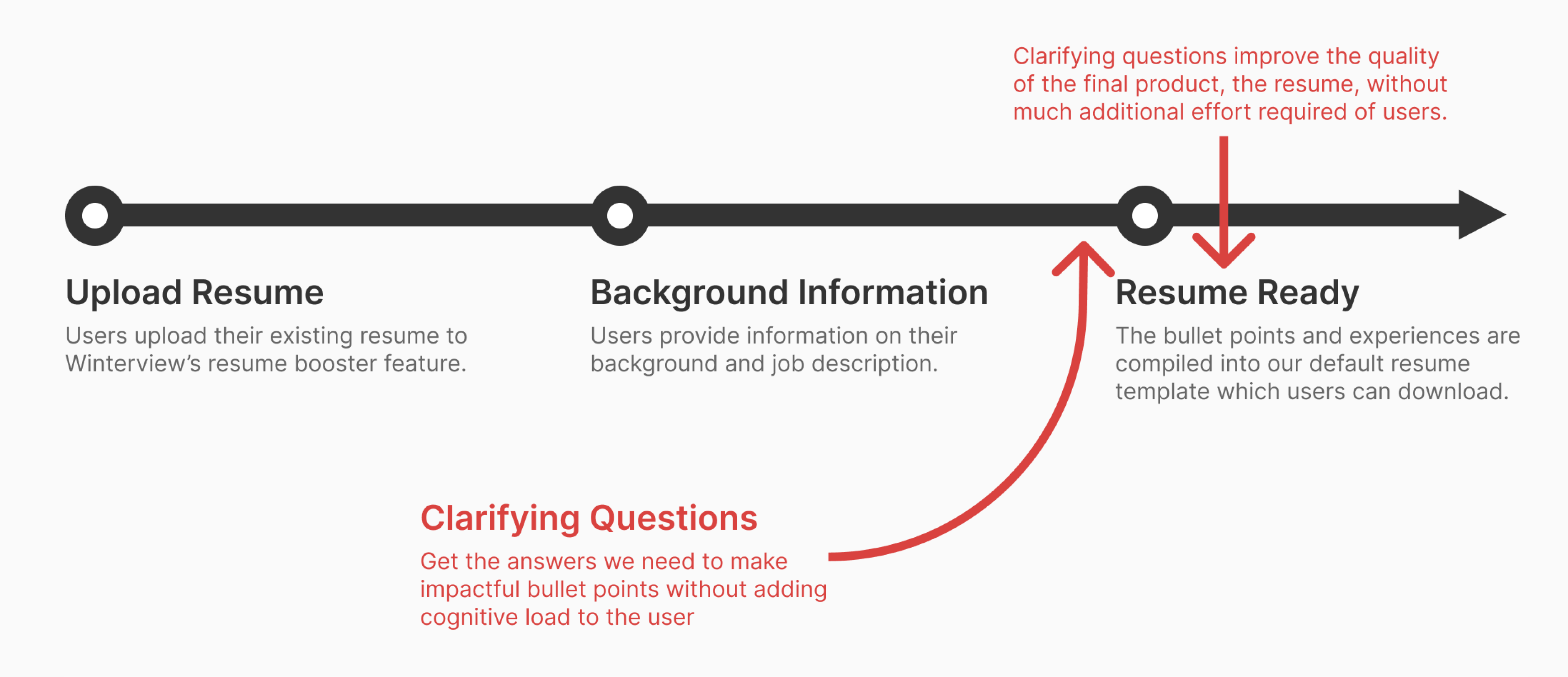

Clarifying Questions

Reduce errors. Lighten the lift.

We still wanted to guide users, but without overwhelming them. By reducing cognitive load, we made it easier for users to supply key information, minimizing the risk of incorrect or incomplete resumes.

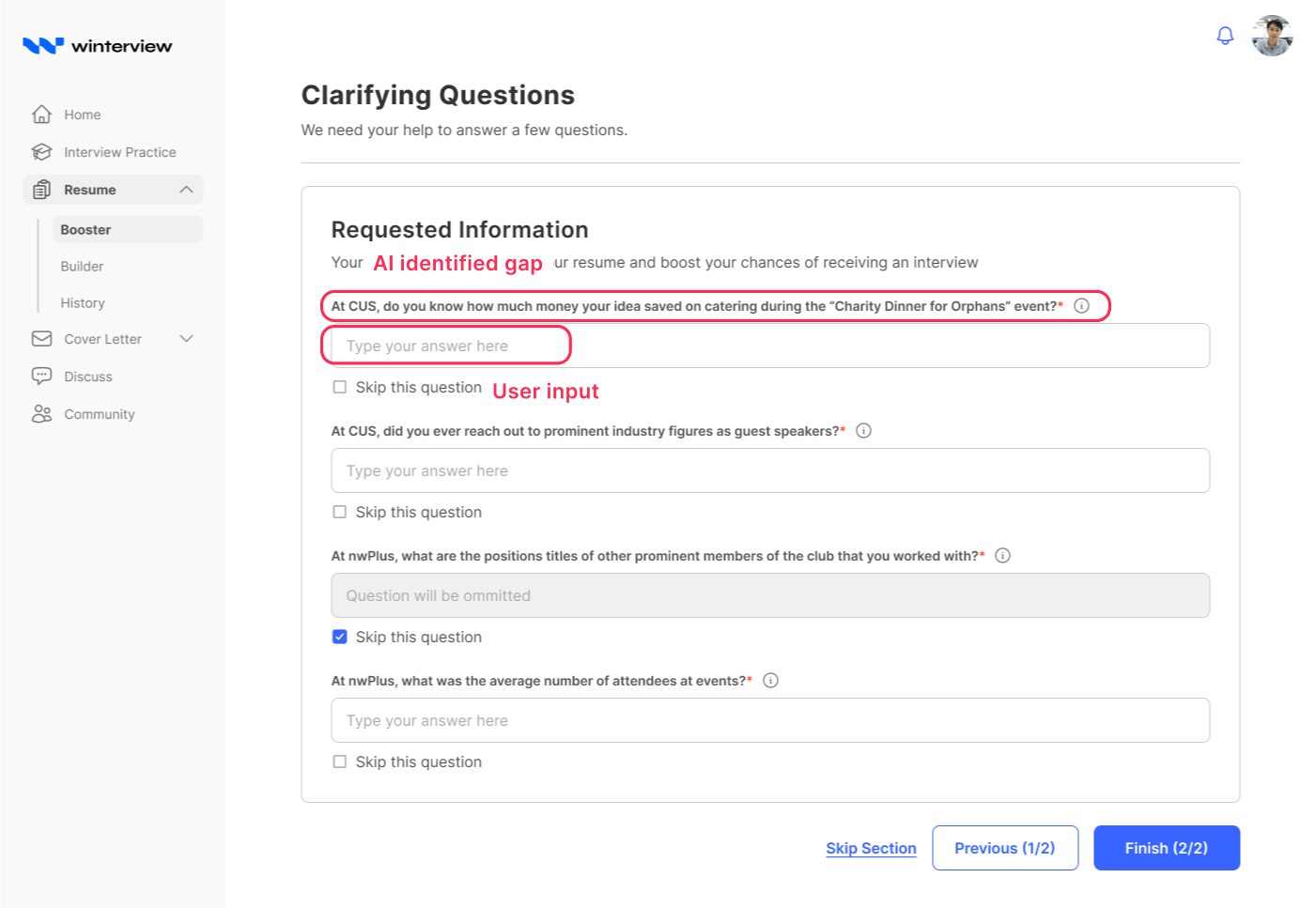

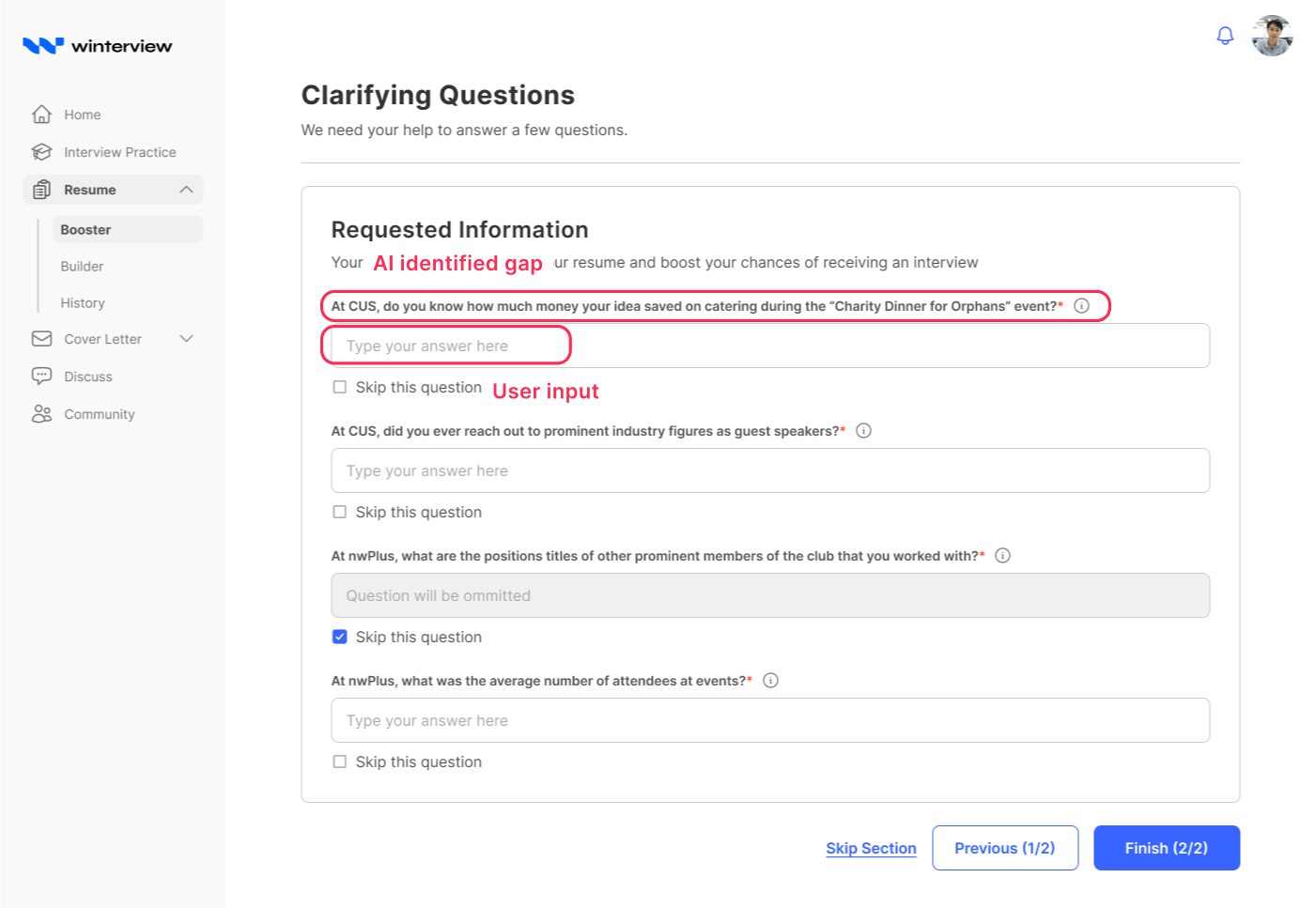

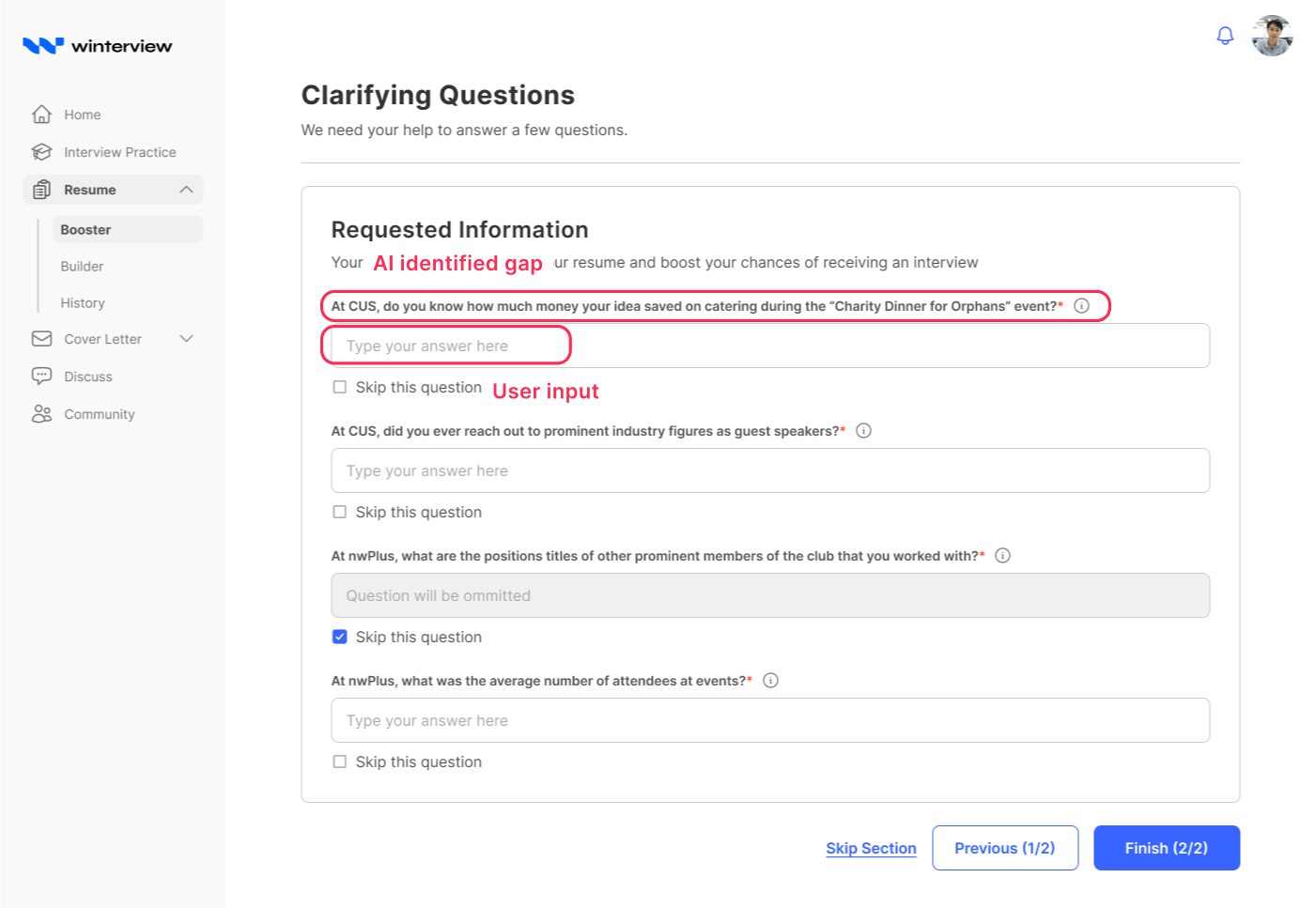

Filling in the blanks

We added a section of clarifying questions to the resume booster. These acted as helpful prompts to gather missing or overlooked details.

Direct line of questioning

Since users often skipped important data, we introduced direct questions like “What % did you improve X by?” to surface that info quickly and clearly.

Power users spoke up

We added support

As we tested the clarifying questions, we noticed that power users needed a smoother, faster experience. A few small quality-of-life changes went a long way.

01

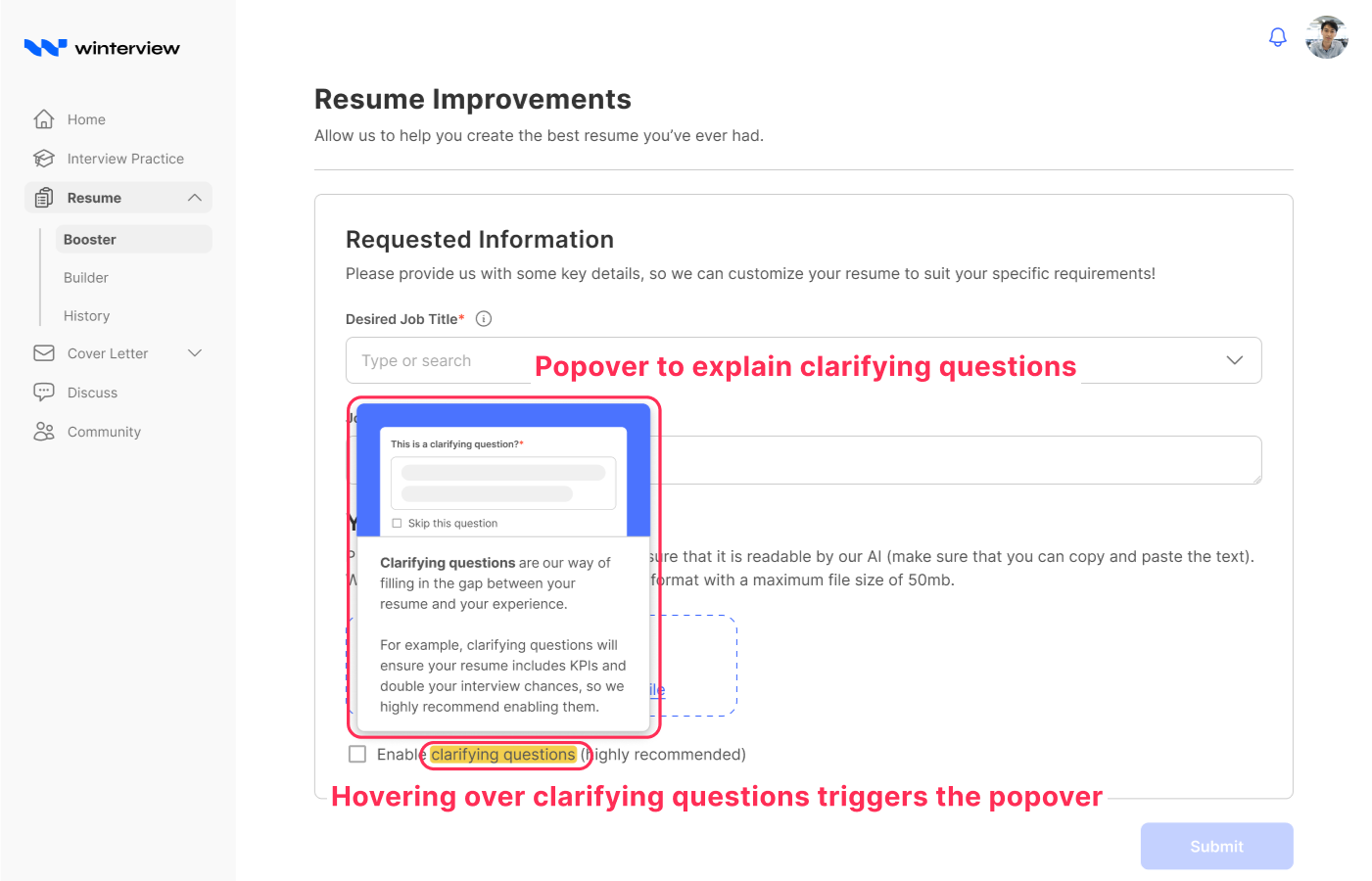

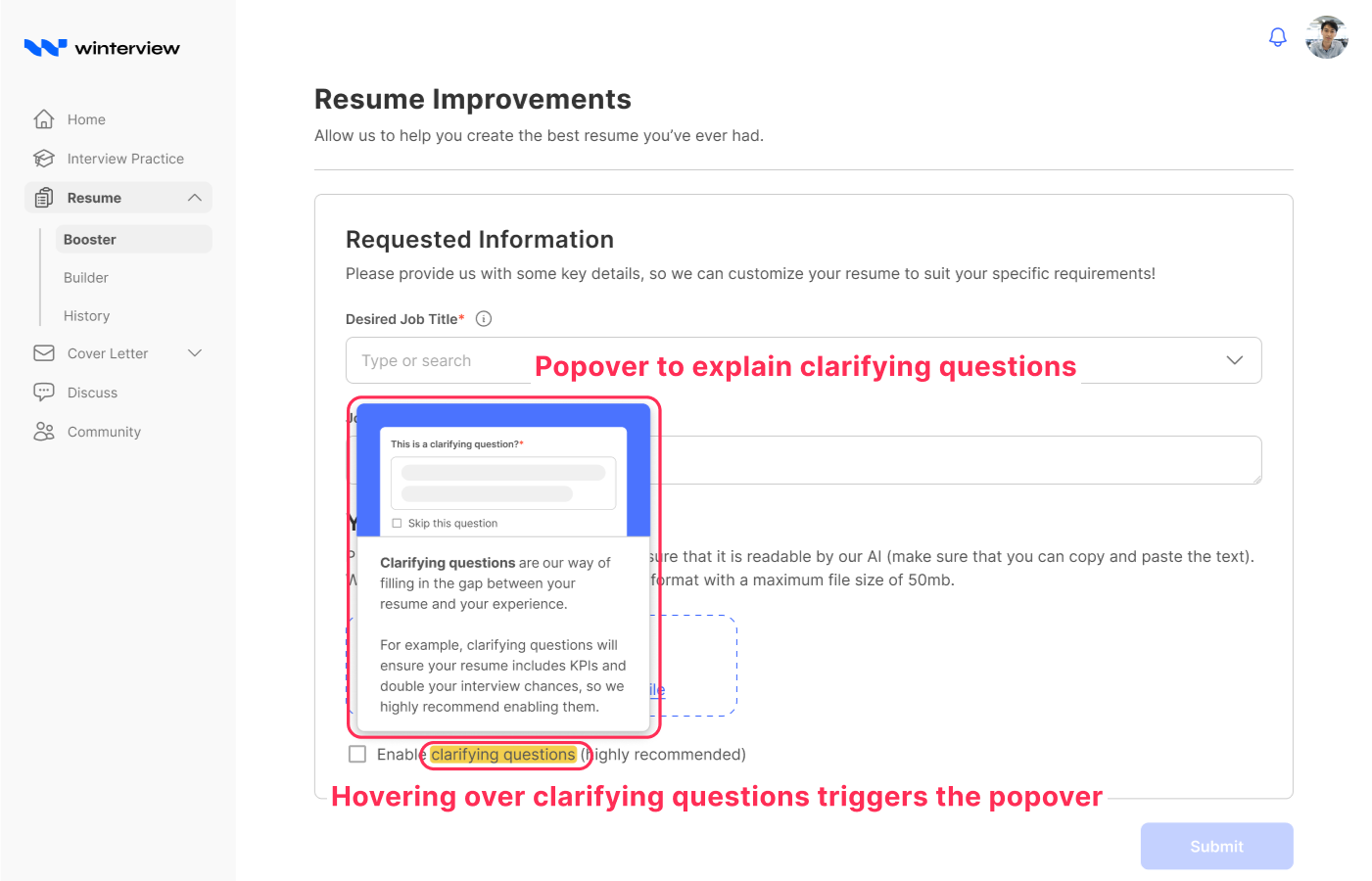

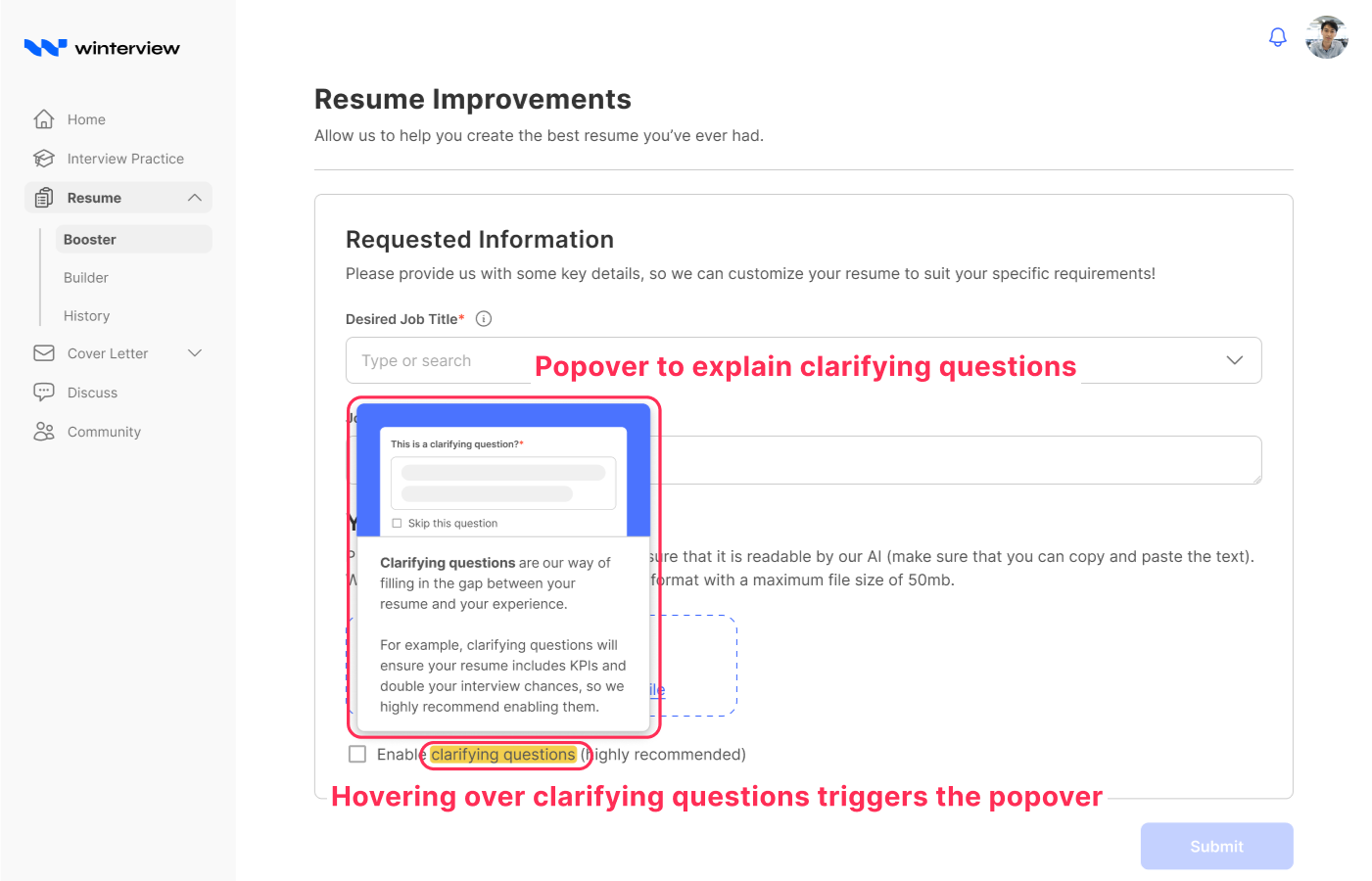

Addressing uncertainty

Early on, many users didn’t know what clarifying questions were or why they mattered. We added simple, in-context definitions to help them understand the purpose.

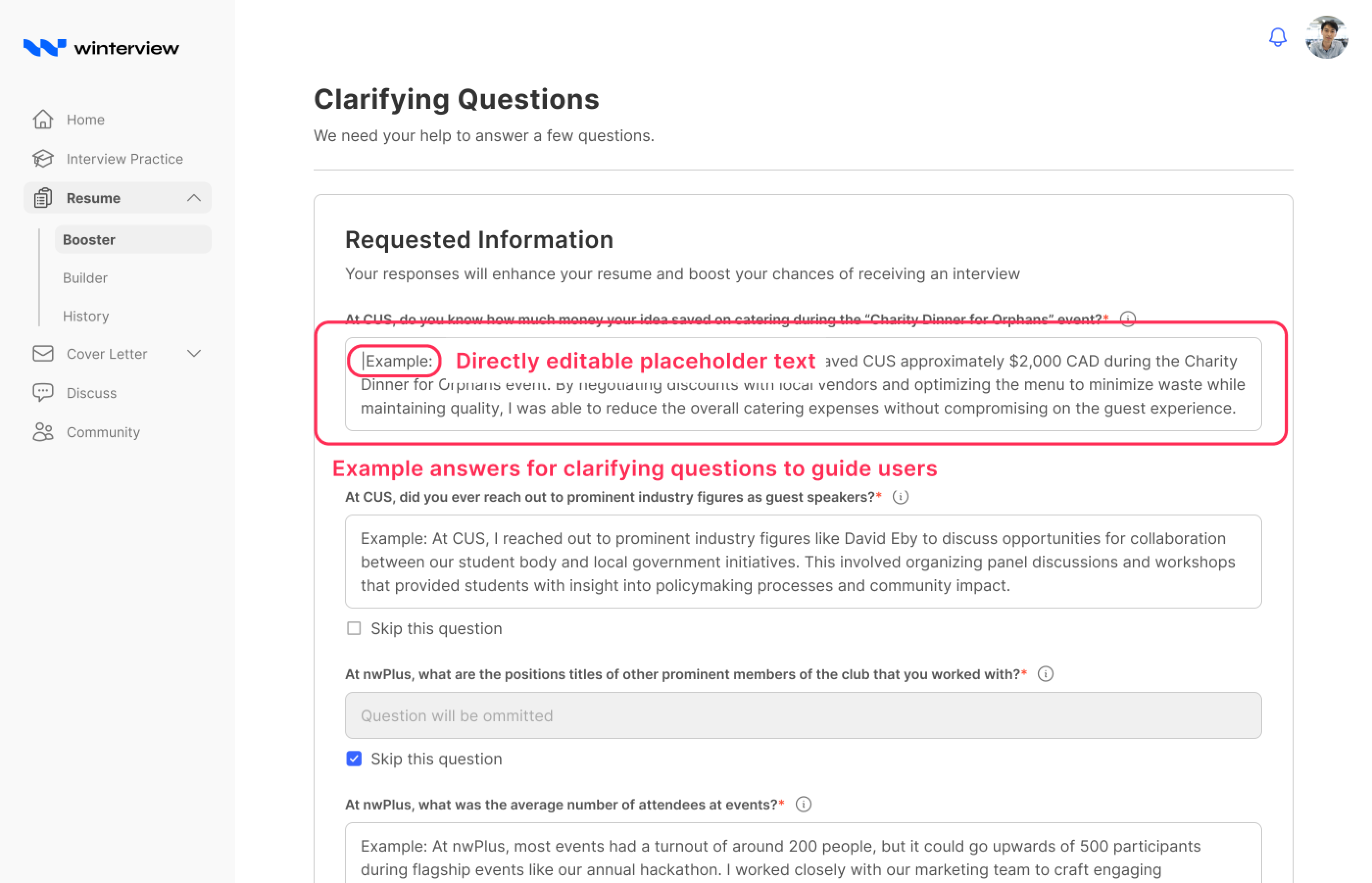

02

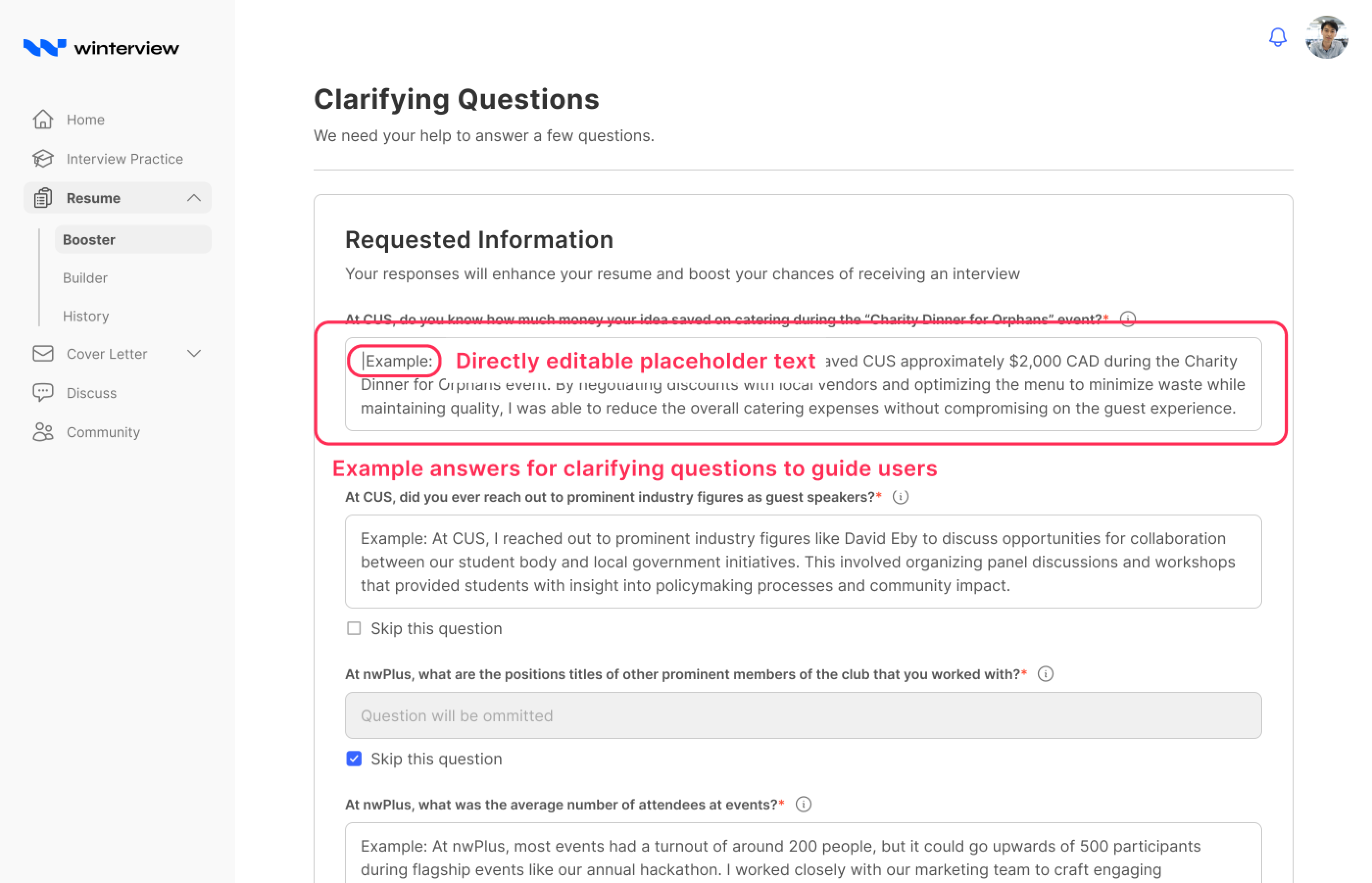

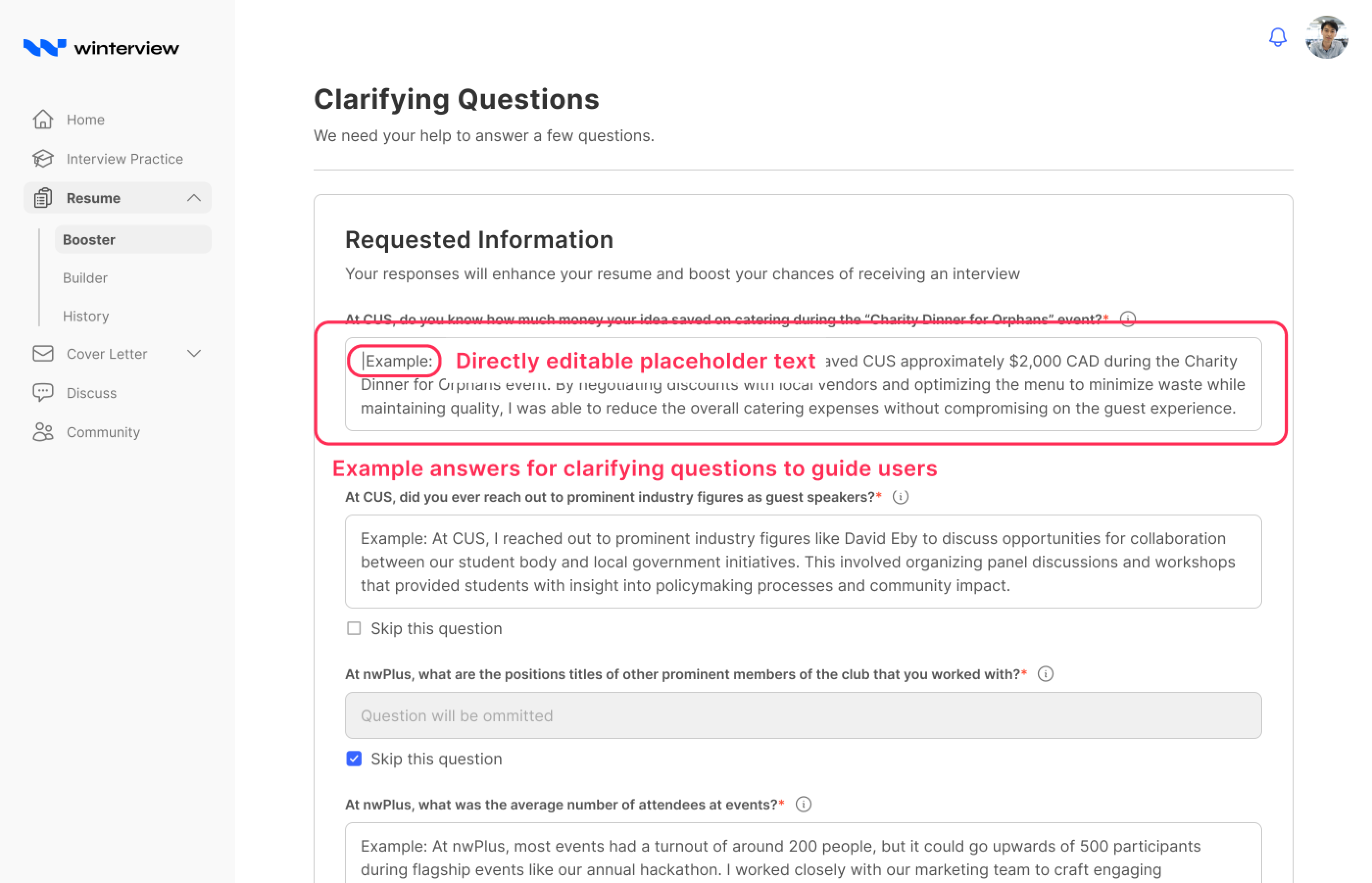

Reducing effort

For high-volume users (3+ resumes/day), answering clarifying questions became tedious. We introduced editable placeholder examples so they could skip the blank-page moment and move faster.

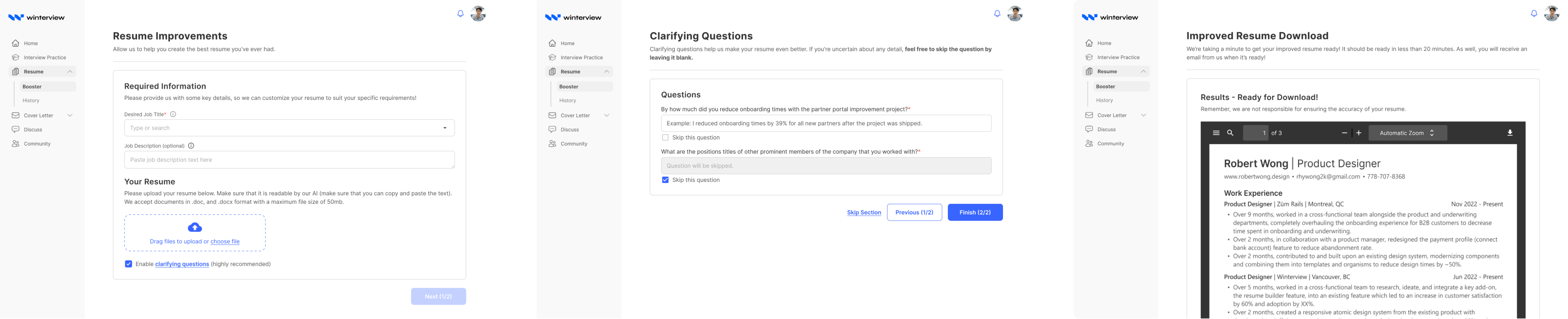

Faster, better, with no thinking required

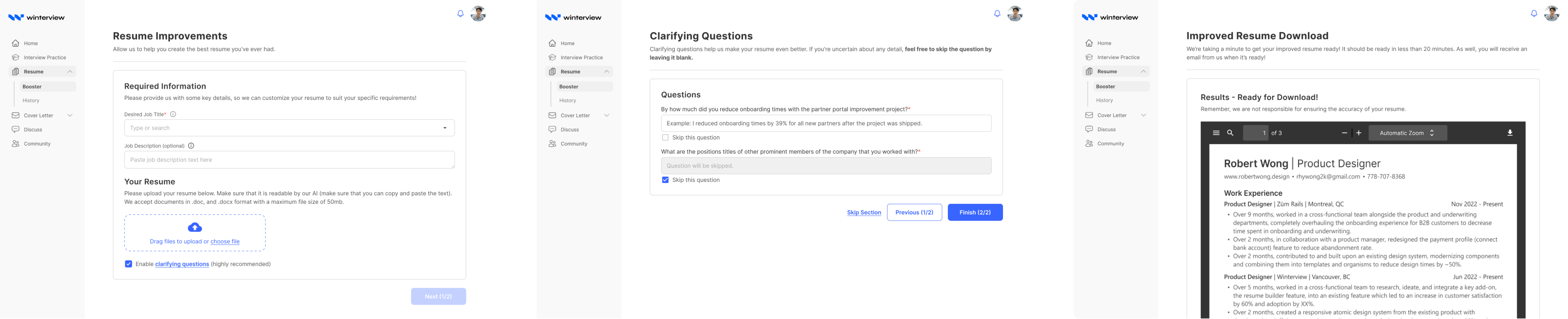

A solution users could trust instantly

After rounds of feedback and iteration, we shipped a streamlined version of the tool that focused on clarity, ease, and speed. We paused development on the resume builder due to high user effort and low ROI, with plans to revisit when the time is right.

The bottom line

Design changes drove real adoption

A few focused UX improvements led to measurable shifts in user behavior, satisfaction, and B2B outcomes.

34%

increase in adoption

After we added clarifying questions, the Resume Booster became our most-used feature, reaching 57% total adoption.

84%

CSAT

Among 200+ users surveyed after using the feature

2x

feature adoption

More than twice as many users were now using the Resume Booster.
